# Angular2 wavesurfer.js code

The Web Audio API handles audio operations inside an audio context and is designed to allow modular routing. Basic audio operations are performed with audio nodes, which are linked together to form an audio routing graph. Several sources, with different types of channel layout, are supported even within a single context. This modular design provides the flexibility to create complex audio functions with dynamic effects.

Audio nodes are linked into chains and simple webs by their inputs and outputs. These chains typically start with one or more sources. Sources provide arrays of sound intensities (samples) at very small timeslices, often tens of thousands of them per second. Sources can be computed mathematically (such as `OscillatorNode`). They can also be recordings from sound/video files (like `AudioBufferSourceNode` and `MediaElementAudioSourceNode`) or audio streams (`MediaStreamAudioSourceNode`). In fact, sound files are themselves just recordings of sound intensities. Those intensities come in from microphones or electric instruments and get mixed down into a single, complicated wave.

The outputs of these nodes can be linked to the inputs of other nodes, which mix or modify these streams of sound samples into different streams. A common modification is multiplying the samples by a value to make them louder or quieter (as is the case with `GainNode`). Once the sound has been processed enough for the intended effect, it can be linked to the input of a destination (`BaseAudioContext.destination`), which sends the sound to the speakers or headphones. This last connection is only necessary if the user is supposed to hear the audio.

A simple, typical workflow for web audio looks something like this:

1. Inside an audio context, create sources, such as an oscillator or a stream.
2. Create effects nodes, such as reverb, biquad filter, panner, or compressor.
3. Choose the final destination of the audio, for example your system speakers.
4. Connect the sources to the effects, and the effects to the destination.

Timing is controlled with high precision and low latency. This lets developers write code that responds accurately to events and can target specific samples, even at a high sample rate.
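The workflow above can be sketched with the standard Web Audio node factory methods. This is a minimal sketch and is not code from the original post. `buildChain` is a hypothetical helper, and the oscillator frequency, filter cutoff, and gain value are arbitrary illustration values. The sketch wires an `OscillatorNode` source through a `BiquadFilterNode` and a `GainNode` to the context's `destination`.

```typescript
// Hypothetical helper: source -> effects -> destination, as in the workflow above.
function buildChain(ctx: BaseAudioContext): { source: OscillatorNode; gain: GainNode } {
  // Source: a mathematically computed tone.
  const source = ctx.createOscillator();
  source.type = "sine";
  source.frequency.value = 440; // A4, in Hz (illustrative value)

  // Effect 1: a low-pass filter (illustrative cutoff).
  const filter = ctx.createBiquadFilter();
  filter.type = "lowpass";
  filter.frequency.value = 1000; // Hz

  // Effect 2: a gain stage that multiplies every sample by 0.5 (about -6 dB).
  const gain = ctx.createGain();
  gain.gain.value = 0.5;

  // connect(node) returns the node it was given, so the calls chain in signal-flow order.
  source.connect(filter).connect(gain).connect(ctx.destination);

  return { source, gain };
}

// Example browser usage (hypothetical; run from a click or tap handler):
// const ctx = new AudioContext();
// const { source } = buildChain(ctx);
// source.start();                    // start playing immediately
// source.stop(ctx.currentTime + 1);  // schedule a stop one second later
```

On iOS and most mobile browsers, an `AudioContext` typically stays suspended until it is created or resumed inside a user gesture. Expect no sound until the user taps something.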

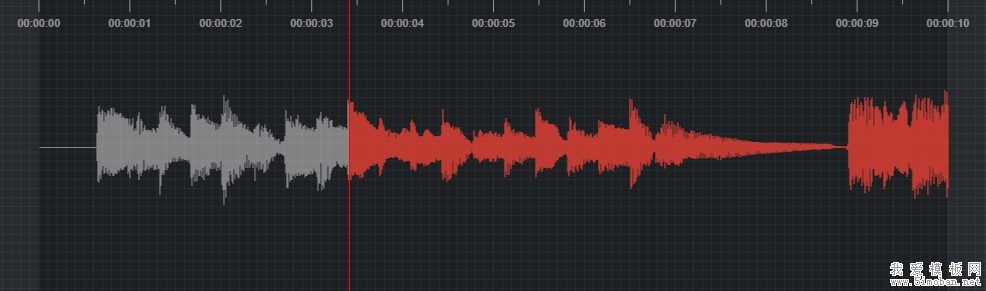

When I load the page on iOS, the waveforms don't display; they only fade in when I click my play button. I tried to locate where things go wrong, and it seems to me that the function basically pauses as it hits the… For whatever reason, it responds properly when I click play or try to play a different track that I have already loaded.
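The post does not confirm a cause, so treat this as an assumption. Safari on iOS does not load media data until the user initiates playback. That could leave a wavesurfer.js instance using the MediaElement backend with nothing to draw until play is clicked. A common workaround is to compute waveform peaks ahead of time, for example on the server, and pass them to wavesurfer's `load()`, which accepts precomputed peaks as its second argument. Check the exact signature for your wavesurfer.js version. The sketch below covers only the peak computation. `computePeaks` is a hypothetical helper that reduces decoded PCM samples in the range -1..1 to one maximum-amplitude value per bucket.

```typescript
// Hypothetical helper: reduce PCM samples (-1..1) to one peak per bucket for waveform drawing.
function computePeaks(samples: Float32Array, bucketCount: number): number[] {
  if (bucketCount <= 0) {
    throw new RangeError("bucketCount must be positive");
  }
  const peaks: number[] = [];
  const bucketSize = samples.length / bucketCount; // may be fractional
  for (let b = 0; b < bucketCount; b++) {
    const start = Math.floor(b * bucketSize);
    const end = Math.floor((b + 1) * bucketSize);
    let peak = 0; // an empty bucket reports silence
    for (let i = start; i < end; i++) {
      const amplitude = Math.abs(samples[i]);
      if (amplitude > peak) peak = amplitude;
    }
    peaks.push(peak);
  }
  return peaks;
}
```

The resulting array could be stored alongside each track and supplied when loading, e.g. `wavesurfer.load(url, peaks)`. That should let the waveform render before the audio itself is fetched.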

# Angular2 wavesurfer.js on Android

I'm using AJAX to create instances of the wavesurfer class and loading each audio file as a MediaElement. This works extremely well on computers and Android phones; however, it does not fully work on iOS.
